To back up or archive a whole website or a single page, first check the QNAP Linux file systems to find a mounted one with enough disk space. For example, the following "df" command lists the file systems of my QNAP. The first one is the root (/, i.e. /dev/ram0), a ramdisk with only 32.9 MB of space. If you run wget there, you will soon get the system message "insufficient ramdisk space!". So we should run wget under the directory "/share/HDA_DATA".

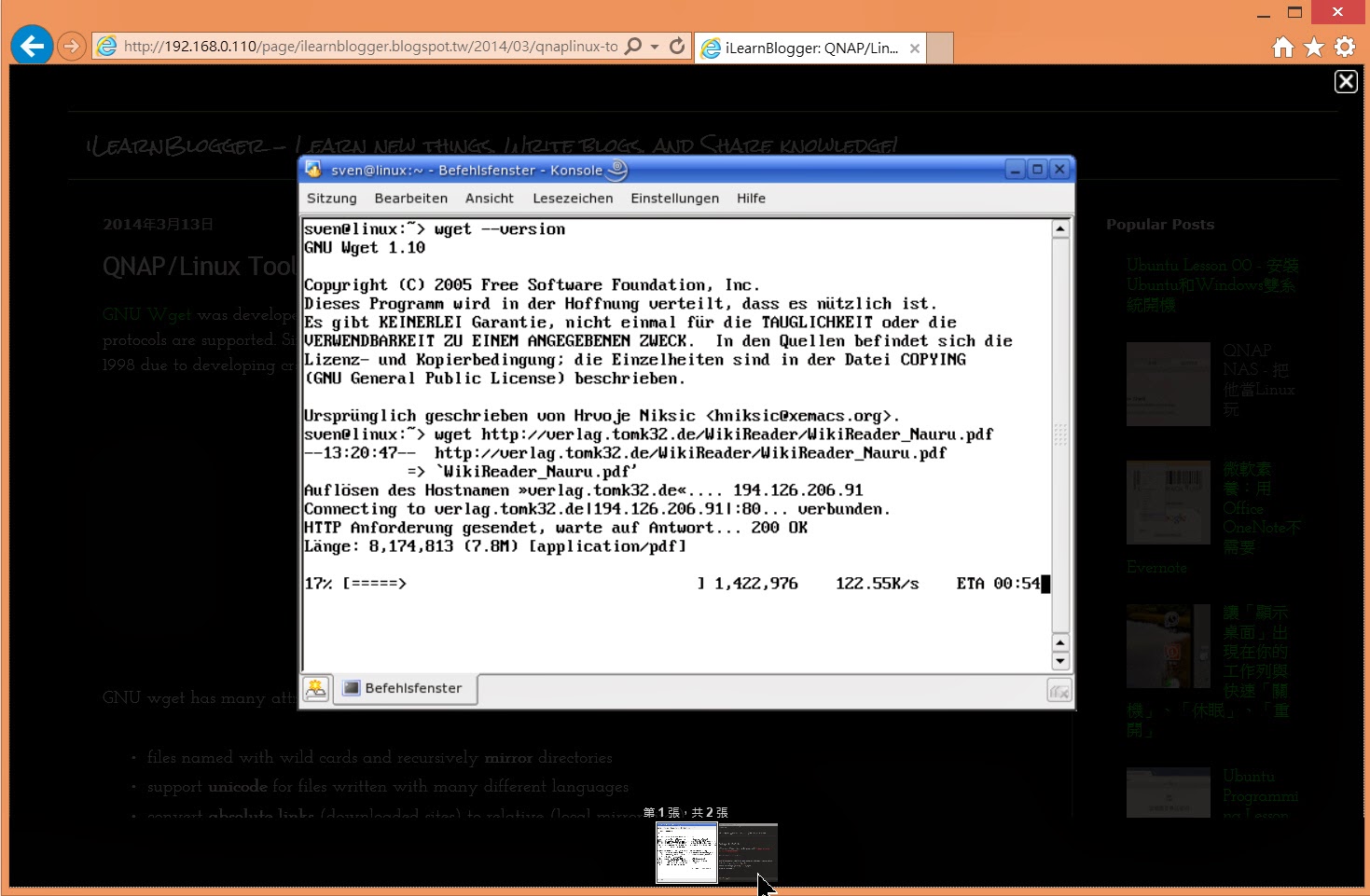
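The same check can be scripted before a large download. The following is only a minimal sketch: the helper names `free_kib` and `has_room` and the 1 GiB default threshold are my own assumptions for illustration, and it assumes that the df on the NAS accepts the POSIX -P and -k flags.

```shell
#!/bin/sh
# Print the free space, in KiB, of the file system that holds directory $1.
free_kib() {
    # -P keeps each file system on one line; -k reports sizes in KiB.
    df -Pk "$1" | awk 'NR == 2 { print $4 }'
}

# Succeed if directory $1 has at least $2 KiB free.
# The 1 GiB default is an arbitrary, illustrative threshold.
has_room() {
    [ "$(free_kib "$1")" -ge "${2:-1048576}" ]
}
```

For example, `has_room /share/HDA_DATA && cd /share/HDA_DATA` changes into the data volume only when it has enough room, while `has_room /` would fail on a 32.9 MB ramdisk.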

First, let's try to archive the whole content of one page (including images, icons, music, etc.) under a directory that I created.

[/share/HDA_DATA/wgtest/page] # wget -E -H -k -K -p -U Mozilla http://ilearnblogger.blogspot.tw/2014/03/qnaplinux-tool-wget-1.html

The result is the target directory "ilearnblogger.blogspot.tw/", which keeps the same directory structure as specified in the URL. Media content presented in the page is also archived under its source <hostname>/<path> structure. After the whole page has been fetched, the links within the original HTML file are converted into relative paths on the local file system. The original HTML is therefore kept as a backup with the .orig suffix (qnaplinux-tool-wget-1.html.orig).

To look up the definition of each option, use grep to filter the desired options out of "wget -h". Note that "\|" (OR) joins several patterns inside the grep pattern string: the "\" is needed in front of the special character "|", and the first "\" of the pattern keeps grep from treating a pattern that starts with "-" as one of its own options.
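As a rough sketch of such a filter, the variant below passes each pattern with grep's -e flag instead of escaping the leading "-" and joining with "\|"; that is a swapped-in alternative to the escaping described above, not the exact command from the screenshot. The function name `show_opts` is hypothetical, and the trailing comma in each pattern assumes the usual "-E,  --long-name" layout of the wget help text.

```shell
#!/bin/sh
# Keep only the help lines that describe the six short options used here.
# Each -e introduces one pattern, so a pattern may safely start with "-".
# Matching is case-sensitive, so "-k," and "-K," select different lines.
show_opts() {
    grep -e '-E,' -e '-U,' -e '-k,' -e '-K,' -e '-p,' -e '-H,'
}
```

Run it as `wget -h | show_opts`.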

Note: both the short option (-) and the long option (--) work, and both are case-sensitive. The short form (e.g. -E) is quick to type, while the long form (e.g. --html-extension) is easier to read.

| Option | Long option | Description |
| --- | --- | --- |
| -E | --html-extension | Save HTML documents with `.html' extension. |
| -U | --user-agent=AGENT | Identify as AGENT instead of Wget/VERSION. Some web servers reject robot-like clients (wget is one of them), so you should mimic a widely used browser (e.g. Chrome, IE) by specifying its browser string (http://www.useragentstring.com/pages/Chrome/) to fool the website. |
| -k | --convert-links | Make links in downloaded HTML point to local files. The URLs of links, images, media content, etc. within the original HTML file are converted into relative paths on the local storage. |
| -K | --backup-converted | Before converting file X, back up the original HTML file X as X.orig. |
| -p | --page-requisites | Get all images, etc. needed to display the HTML page, i.e. get all the content presented in the page. |
| -H | --span-hosts | Go to foreign hosts when recursive. |
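For readability, the single-page command can also be written with the long option names. This is only a sketch: the function name `archive_page` is hypothetical, and --html-extension is the name printed by the wget on my QNAP (newer wget releases call it --adjust-extension).

```shell
#!/bin/sh
# Archive one page together with everything needed to display it offline.
# Long-form equivalent of: wget -E -H -k -K -p -U Mozilla URL
archive_page() {
    wget --html-extension \
         --span-hosts \
         --convert-links \
         --backup-converted \
         --page-requisites \
         --user-agent=Mozilla \
         "$1"
}
```

Called from the archive directory, `archive_page http://ilearnblogger.blogspot.tw/2014/03/qnaplinux-tool-wget-1.html` should behave like the short-option command shown earlier.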

In the converted page, all blog links are relative to the local storage, and the image URL is also relative to the local storage.

In this way, it is easy to back up or archive personal blogs and content published on cloud and social network services. The wget options used to archive the whole page content are explained above.

To mirror the whole site of my blog, just add the mirror option (-m or --mirror).
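A mirror run might look like the sketch below. The exact option combination is an assumption rather than the command I actually typed: the function name `mirror_site` and the variable `CHROME_UA` are hypothetical, the browser string is only an illustrative Chrome value, and -H is left out because, combined with the recursion that -m turns on, it would let wget follow links to other hosts.

```shell
#!/bin/sh
# Illustrative Chrome browser string (an assumption); replace it with a current one.
CHROME_UA='Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/33.0.1750.154 Safari/537.36'

# Mirror the whole site rooted at URL $1 into the current directory.
# -m turns on recursion and time-stamping; the other flags are the same
# ones used for the single-page archive.
mirror_site() {
    wget -m -E -k -K -p -U "$CHROME_UA" "$1"
}
```

Run it from a directory on the data volume, for example `mirror_site http://ilearnblogger.blogspot.tw/`. Without -H, images served from other hosts are not fetched, which is the price of keeping the crawl on one site.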

In this case, I made the wget robot mimic the newest Chrome browser. Consequently, my blog's total page views increased, since the command reads all the articles of my blog very quickly. However, my wget requests seem to have been banned by the Google Blogger site soon afterwards, and the counter stopped increasing.

Before running the Chrome-mimicking wget, the counter was 18,268.

After running the Chrome-mimicking wget for several seconds, the counter really increased.

Let's check the result tomorrow!
